Artificial Intelligence: Advancements and Applications

Artificial Intelligence stands at the forefront of technological innovation, poised to reshape industries and enhance daily life.

In the realm of technological marvels, Artificial Intelligence (AI) stands as a crown jewel, continually evolving and pushing boundaries. AI encompasses a vast spectrum of advancements and applications, each a testament to human ingenuity. Delving into this world of innovation, we explore the mesmerizing facets of AI, uncovering its potential, challenges, and the profound impact it leaves on our world.

Understanding Artificial Intelligence

Artificial Intelligence (AI) is a field of computer science that focuses on the development of machines that can perform tasks that would normally require human intelligence. AI systems are designed to learn, reason, and make decisions based on data.

Types of AI: The two types of AI most often discussed are Narrow AI and General AI. Narrow AI, also known as Weak AI, is designed to perform a specific task, such as playing chess or recognizing faces. General AI, also known as Strong AI, would be able to perform any intellectual task that a human can do, and it remains theoretical. A third, hypothetical category, Superintelligent AI, is covered in the section on types of AI.

Overall, AI is a rapidly growing field with a wide range of applications. As AI technology continues to advance, it has the potential to revolutionize the way we live and work.

History of Artificial Intelligence

Artificial Intelligence (AI) has been around for quite some time, although the concept of AI has evolved over the years. The history of AI can be traced back to the 1950s when researchers began exploring the possibility of creating machines that could think and learn like humans.

Early Days of AI: In 1956, John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon organized the Dartmouth Conference, which is widely considered the birthplace of AI. In their proposal for the conference, the organizers conjectured that “every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it.”

The early days of AI were marked by significant breakthroughs in the field. In the late 1950s and early 1960s, researchers developed programs that could solve algebra and geometry problems, play checkers, and even prove mathematical theorems.

AI Winter: However, progress in AI was not always smooth. The 1970s and 1980s saw periods known as “AI winters,” when funding for AI research was cut because of a lack of tangible results. Interest was reignited from the late 1990s onward by advances in computing power, the availability of large datasets, and the development of new algorithms.

Modern AI: AI is a rapidly growing field with numerous applications in various industries, including healthcare, finance, and transportation. Machine learning, a subset of AI, has led to significant advancements in areas such as speech recognition, natural language processing, and image recognition.

In recent years, there has been a significant focus on developing AI systems that can learn from data and improve their performance over time. One such approach, known as deep learning, trains neural networks with many layers and has led to significant breakthroughs in areas such as computer vision and autonomous driving.

Overall, the history of AI has been marked by significant advances and setbacks. However, the field continues to evolve, and AI is poised to play an increasingly important role in our lives in the years to come.

Types of Artificial Intelligence

Artificial Intelligence can be broadly classified into three main categories based on their capabilities: Weak AI, Strong AI, and Superintelligent AI.

Weak AI: Weak AI, also known as Narrow AI, is designed to perform a specific task or set of tasks. These AI systems are not capable of thinking or learning beyond their programmed tasks. Examples of Weak AI include virtual personal assistants like Siri and Alexa, chatbots, and image recognition software.

Strong AI: Strong AI, also known as Artificial General Intelligence (AGI), is designed to perform any intellectual task that a human can do. These AI systems are capable of reasoning, learning, and problem-solving beyond their programming. Strong AI is still a theoretical concept and has not yet been achieved.

Superintelligent AI: Superintelligent AI, also known as Artificial Superintelligence (ASI), is an AI system that would surpass human intelligence in all aspects. Such systems would be capable of self-improvement and could rapidly increase their own intelligence beyond human comprehension. Superintelligent AI is still a hypothetical concept, and its development raises concerns about the safety and control of such systems.

In summary, AI can be classified into three main categories: Weak AI, Strong AI, and Superintelligent AI. While Weak AI is the only form in use today, Strong AI and Superintelligent AI are still theoretical concepts that have not yet been realized.

Applications of Artificial Intelligence

Artificial Intelligence (AI) is a versatile technology that has the potential to revolutionize various industries. Here are some of the applications of AI in different sectors:

Healthcare: AI is being used in healthcare to improve patient outcomes, reduce costs, and enhance the overall quality of care. Some of the applications of AI in healthcare include:

- Medical imaging: AI-powered medical imaging tools can help radiologists detect and diagnose diseases more accurately and quickly.

- Drug discovery: AI can assist in drug discovery by analyzing large amounts of data to identify potential drug candidates.

- Virtual assistants: Virtual assistants powered by AI can help patients manage their health by providing personalized health advice and reminders.

Finance: AI is transforming the financial industry by enabling companies to make better decisions, reduce costs, and improve customer experiences. Some of the applications of AI in finance include:

- Fraud detection: AI-powered fraud detection systems can analyze large amounts of data to detect fraudulent transactions in real-time.

- Risk management: AI can help financial institutions manage risk more effectively by analyzing data to identify potential risks and predict market trends.

- Chatbots: Chatbots powered by AI can improve customer experiences by providing personalized support and assistance.
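
The fraud detection idea above can be illustrated with a very small sketch. It assumes a hypothetical list of one customer's past transaction amounts and flags new amounts that sit far from the usual range using a z-score. Production systems use far richer features and learned models, so this is only meant to show the basic idea of spotting unusual transactions from data.

```python
from statistics import mean, stdev

def flag_outliers(history, new_amounts, threshold=3.0):
    """Return the new amounts whose z-score against the history exceeds the threshold."""
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # No variation in the history: anything different from the mean is unusual.
        return [a for a in new_amounts if a != mu]
    return [a for a in new_amounts if abs(a - mu) / sigma > threshold]

# Hypothetical past card transactions for one customer, in dollars.
history = [42.0, 38.5, 45.0, 40.0, 39.5, 44.0, 41.0]

# One ordinary purchase and one that is far outside the usual range.
suspicious = flag_outliers(history, [43.0, 950.0])
print(suspicious)
```

The threshold of 3.0 is an assumption chosen for illustration. Lowering it catches more fraud but also flags more legitimate purchases.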

Transportation: AI is revolutionizing transportation by enabling companies to develop autonomous vehicles and improve logistics operations. Some of the applications of AI in transportation include:

- Autonomous vehicles: AI-powered autonomous vehicles have the potential to improve road safety and reduce traffic congestion by reducing human error.

- Logistics optimization: AI can help logistics companies optimize their operations by analyzing data to identify the most efficient routes and schedules.

- Predictive maintenance: AI can assist in predictive maintenance by analyzing data to identify potential maintenance issues before they become major problems.
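
To make the logistics optimization item above more concrete, here is a minimal sketch of a route-planning heuristic. It assumes delivery stops are given as hypothetical (x, y) coordinates and uses the nearest-neighbor rule: always drive to the closest stop that has not been visited yet. This is a simple heuristic, not an optimal solver, and real logistics systems also account for traffic, time windows, and vehicle capacity.

```python
import math

def nearest_neighbor_route(depot, stops):
    """Build a route by repeatedly visiting the closest unvisited stop."""
    route = [depot]
    remaining = list(stops)
    current = depot
    while remaining:
        nearest = min(remaining, key=lambda p: math.dist(current, p))
        remaining.remove(nearest)
        route.append(nearest)
        current = nearest
    return route

def route_length(route):
    """Total straight-line distance travelled along the route."""
    return sum(math.dist(a, b) for a, b in zip(route, route[1:]))

# Hypothetical depot and delivery stops on a straight road.
depot = (0, 0)
stops = [(5, 0), (1, 0), (3, 0)]

route = nearest_neighbor_route(depot, stops)
print(route, route_length(route))
```

Visiting the stops in the order they were listed would cover 11 units of distance, while the heuristic's route covers 5.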

Education: AI is being used in education to improve student outcomes, personalize learning experiences, and streamline administrative tasks. Some of the applications of AI in education include:

- Personalized learning: AI-powered personalized learning systems can adapt to the needs of individual students and provide personalized feedback and support.

- Automated grading: AI can assist in automated grading by analyzing student work and providing feedback to teachers.

- Administrative tasks: AI can help streamline administrative tasks such as scheduling and record-keeping, freeing up teachers to focus on teaching.

Artificial Intelligence Technologies

Artificial Intelligence (AI) is a rapidly growing field that encompasses a wide range of technologies. Some of the most popular AI technologies are:

Machine Learning: Machine learning is a type of AI that allows machines to learn from data without being explicitly programmed. It involves creating algorithms that can learn from and make predictions on data. Machine learning is used in a variety of applications, including image and speech recognition, fraud detection, and recommendation systems. Some popular machine learning frameworks include TensorFlow, PyTorch, and Scikit-learn.
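
As a rough illustration of what "learning from data without being explicitly programmed" means, the sketch below fits a straight line to a handful of example points and then uses it to make a prediction. The data (hours studied and exam scores) is hypothetical, and the code uses plain Python rather than any of the frameworks named above. The point is that the relationship between input and output is never written into the program; it is estimated from the examples.

```python
def fit_line(xs, ys):
    """Least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical training data: hours studied and the resulting exam score.
hours = [1, 2, 3, 4, 5]
scores = [52, 54, 56, 58, 60]

slope, intercept = fit_line(hours, scores)

def predict(h):
    """Predict a score for an unseen number of study hours."""
    return slope * h + intercept

print(slope, intercept, predict(6))
```

Because the example data lies exactly on a line, the fit recovers a slope of 2 and an intercept of 50. Real data is noisy, and the fitted line is then only an approximation.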

Natural Language Processing: Natural Language Processing (NLP) is a subset of AI that focuses on the interaction between computers and human language. It involves teaching machines to understand and interpret human language, including spoken and written language. NLP is used in a variety of applications, including chatbots, language translation, and sentiment analysis. Some popular NLP libraries include spaCy, NLTK, and Gensim.
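
Sentiment analysis, mentioned above, can be sketched in its simplest form as counting positive and negative words. The word lists here are tiny and hypothetical, and the sketch does not use any of the libraries named above. Modern NLP systems learn these associations from data instead of relying on fixed lists.

```python
import re

# Tiny hypothetical word lists; real lexicons contain thousands of entries.
POSITIVE = {"good", "great", "love", "excellent"}
NEGATIVE = {"bad", "poor", "hate", "terrible"}

def tokenize(text):
    """Lowercase the text and split it into words."""
    return re.findall(r"[a-z']+", text.lower())

def sentiment(text):
    """Classify text by counting positive and negative words."""
    words = tokenize(text)
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

print(sentiment("I love this great phone"))
print(sentiment("Terrible battery, poor screen"))
print(sentiment("It arrived on Tuesday"))
```

A word-counting approach like this misses negation ("not good") and sarcasm, which is one reason learned models are preferred in practice.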

Robotics: Robotics is a field closely tied to AI that involves the design, construction, and operation of robots. It combines AI with mechanical engineering and electronics to create machines that can perform tasks autonomously. Robotics is used in a variety of applications, including manufacturing, healthcare, and space exploration. Some popular robotics tools include ROS, Gazebo, and V-REP (now called CoppeliaSim).

Speech Recognition: Speech recognition is a type of AI that involves teaching machines to understand and interpret spoken language. It involves creating algorithms that can recognize and transcribe speech into text. Speech recognition is used in a variety of applications, including virtual assistants, dictation software, and voice-controlled devices. Some popular speech recognition tools include Kaldi, CMU Sphinx, and Google Cloud Speech-to-Text.

These are some of the most popular AI technologies in use today. Each technology has its own strengths and weaknesses, and each is used in a variety of applications. As AI continues to evolve, we can expect to see even more advanced technologies being developed in the future.

Ethics and Artificial Intelligence

As artificial intelligence (AI) continues to advance, it raises significant ethical concerns. AI is a technology that can learn and make decisions that would typically require human intelligence. Therefore, as AI becomes more prevalent in our daily lives, it is essential to consider the ethical implications of its use.

Bias and Discrimination: One of the most significant ethical concerns surrounding AI is the potential for bias and discrimination. AI algorithms are only as unbiased as the data they are trained on. If the data used to train AI algorithms are biased, the algorithms themselves will be biased. This can lead to discrimination against certain groups of people, such as women and people of color.
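
One simple way to look for the kind of bias described above is to compare how often a system gives a favorable decision to different groups. The sketch below assumes a hypothetical log of (group, approved) decisions and computes the gap between the highest and lowest approval rates, sometimes called a demographic parity gap. A large gap does not prove discrimination on its own, but it is a signal that the data or the model deserves a closer look.

```python
def selection_rates(records):
    """Share of favorable decisions per group."""
    totals, positives = {}, {}
    for group, approved in records:
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + (1 if approved else 0)
    return {group: positives[group] / totals[group] for group in totals}

def parity_gap(records):
    """Difference between the highest and lowest group approval rates."""
    rates = selection_rates(records)
    return max(rates.values()) - min(rates.values())

# Hypothetical decisions made by a model: (group label, approved?).
decisions = [
    ("A", True), ("A", True), ("A", True), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

rates = selection_rates(decisions)
gap = parity_gap(decisions)
print(rates, gap)
```

In this made-up log, group A is approved 75% of the time and group B 25% of the time, so the gap is 0.5.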

Privacy: Another ethical concern surrounding AI is privacy. As AI algorithms become more sophisticated, they can collect and analyze vast amounts of data about individuals. This can lead to significant privacy concerns, as individuals may not want their personal data to be collected or used without their consent.

Responsibility and Accountability: As AI becomes more prevalent in our daily lives, it is essential to consider who is responsible and accountable for the decisions made by AI algorithms. In many cases, it may be difficult to determine who is responsible for the actions of an AI algorithm, as the algorithm may have learned and made decisions independently.

As AI becomes more prevalent in our daily lives, it is essential to consider the ethical implications of its use. Bias and discrimination, privacy concerns, and responsibility and accountability are just a few of the ethical concerns surrounding AI. It is crucial to address these concerns proactively to ensure that AI is used in a responsible and ethical manner.

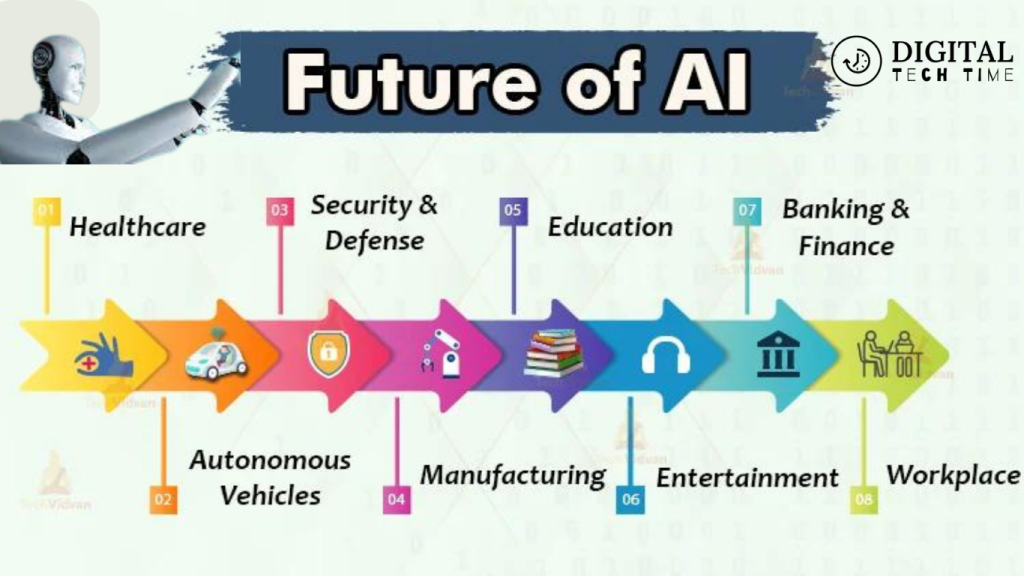

Future of Artificial Intelligence

Artificial Intelligence (AI) has come a long way since its inception, and it is only going to become more advanced in the future. AI has the potential to revolutionize every aspect of human life, from healthcare to transportation to entertainment. Here are a few predictions for the future of AI:

Human-level AI: Many experts predict that human-level AI could exist within the next 100 years. According to a World Economic Forum report, 90% of AI experts believe that human-level AI could exist within this timeframe. Human-level AI is defined as AI that can perform any intellectual task that a human can. This could have a profound impact on society, as it would mean that machines could potentially replace humans in many jobs.

Increased Automation: As AI becomes more advanced, it will become increasingly capable of automating tasks that are currently performed by humans. This could lead to significant changes in the job market, as many jobs that are currently performed by humans could be automated. However, it could also lead to increased productivity and efficiency, as machines are often able to perform tasks more quickly and accurately than humans.

Improved Healthcare: AI has the potential to revolutionize healthcare by improving diagnosis, treatment, and patient outcomes. AI can analyze large amounts of medical data and identify patterns that humans may not be able to see. This could lead to earlier diagnosis of diseases and more personalized treatment plans. Additionally, AI-powered robots could assist doctors and nurses in performing surgeries and other medical procedures.

Enhanced Transportation: AI has the potential to improve transportation in a number of ways. Self-driving cars, for example, could reduce the number of accidents caused by human error. Additionally, AI could be used to optimize traffic flow and reduce congestion. This could lead to faster and more efficient transportation for everyone.

Overall, the future of AI is bright, with the potential to revolutionize every aspect of human life. While there are concerns about the impact that AI could have on jobs and society, there is no doubt that AI will continue to play an increasingly important role in our lives in the years to come.

Challenges in Artificial Intelligence

Artificial Intelligence (AI) is revolutionizing various industries and fields, from healthcare to finance to transportation. However, with the benefits of AI come several challenges that developers and researchers need to address. In this section, we will discuss some of the most common challenges in AI.

Computing Power: One of the most significant challenges in AI is computing power. Training and running AI models requires a tremendous amount of computation to process vast amounts of data and perform complex tasks. This demand is costly, both financially and, through the energy it consumes, environmentally. To address this challenge, researchers are exploring new ways to optimize algorithms and hardware to reduce energy consumption.

Data Quality and Bias: AI algorithms rely on vast amounts of data to learn and make decisions. However, the quality of the data used can significantly impact the accuracy and reliability of the AI system. Biases present in the data can also carry over into the system, leading to biased outputs. To address this challenge, researchers need to ensure that the data used is of high quality and as free from bias as possible.

Explainability and Transparency: AI systems can be complex, making it difficult to understand how they arrive at specific decisions. This lack of explainability and transparency can be problematic, especially in high-stakes applications such as healthcare and finance. To address this challenge, researchers are developing methods to make AI systems more transparent and explainable.
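
For very simple models, an explanation can be read directly from the model itself. The sketch below assumes a hypothetical linear scoring model with made-up weights and shows how much each input contributed to one decision. Complex models such as deep neural networks do not break down this cleanly, which is why dedicated explainability methods are an active research area.

```python
def explain_linear(weights, bias, features):
    """Return the score and each feature's contribution, largest effect first."""
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked

# Hypothetical credit-scoring weights and one applicant's inputs.
weights = {"income": 2, "debt": -3, "years_employed": 1}
bias = 10
applicant = {"income": 4, "debt": 5, "years_employed": 2}

score, ranked = explain_linear(weights, bias, applicant)
print(score)
for name, contribution in ranked:
    print(name, contribution)
```

Here the applicant's debt lowers the score by 15 points, more than income and years of employment raise it, so debt is listed first as the main driver of the decision.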

Ethical Considerations: As AI becomes more prevalent, ethical considerations become increasingly important. AI systems can have significant impacts on society, and developers need to consider the ethical implications of their work. For example, AI algorithms can perpetuate biases or infringe on personal privacy. To address this challenge, researchers need to consider the ethical implications of their work and develop ethical guidelines for AI development and deployment.

Human-AI Collaboration: AI systems are not intended to replace humans but to work alongside them. However, human-AI collaboration can be challenging, as AI systems can be difficult to understand and interact with. To address this challenge, researchers are developing methods to improve human-AI collaboration, such as natural language processing and user-friendly interfaces.

AI has the potential to revolutionize various industries and fields. However, developers and researchers need to address the challenges in AI to ensure that these systems are accurate, reliable, and ethical.

Artificial Intelligence in Popular Culture

Artificial Intelligence (AI) has been a popular theme in science fiction movies, television shows, and books for decades. From the menacing HAL 9000 in “2001: A Space Odyssey” to the lovable WALL-E in the eponymous Pixar movie, AI has been portrayed in a variety of ways, shaping public perceptions of the technology.

One of the earliest precursors of AI in popular culture was Mary Shelley’s “Frankenstein,” published in 1818. The novel tells the story of a scientist who creates a creature out of dead body parts and brings it to life. While not explicitly an AI, the creature is often regarded as a precursor to the idea of artificial life and intelligence.

In the 20th century, AI became a more prominent theme in popular culture. The 1968 movie “2001: A Space Odyssey” featured the AI system HAL 9000, which eventually turns on the human crew. The Terminator franchise, which began in 1984, features a dystopian future where intelligent machines have taken over the world.

More recently, AI has been portrayed in a more positive light in popular culture. The 2013 movie “Her” tells the story of a man who falls in love with an AI assistant named Samantha. The movie explores the concept of human-AI relationships and the potential for AI to be more than just a tool.

Overall, the portrayal of AI in popular culture has had a significant impact on public perceptions of the technology. While some depictions have been negative, others have been more optimistic, showing the potential for AI to improve our lives in a variety of ways.

Frequently Asked Questions

How is artificial intelligence used in chatbots?

Artificial intelligence is used in chatbots to enable them to understand natural language and respond to users in a human-like manner. Chatbots use natural language processing (NLP) techniques to analyze and interpret user inputs, which allows them to provide relevant responses. AI-powered chatbots can also learn from user interactions, which enables them to improve their responses over time.
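
A very reduced version of this idea is sketched below. It assumes two hypothetical intents with example phrasings and canned responses, and picks the intent whose example words overlap most with the user's message (Jaccard similarity). The sketch does not learn from interactions; real chatbots replace the word-overlap step with trained language models.

```python
import re

# Hypothetical intents: example phrasing plus a canned response.
INTENTS = {
    "hours": {
        "examples": "what time do you open close hours",
        "response": "We are open 9am to 5pm, Monday to Friday.",
    },
    "refund": {
        "examples": "how do i get a refund return money back",
        "response": "You can request a refund within 30 days.",
    },
}
FALLBACK = "Sorry, I did not understand that."
MIN_SCORE = 0.15  # assumed cutoff below which the bot admits it is unsure

def tokens(text):
    """Set of lowercase words in the text."""
    return set(re.findall(r"[a-z]+", text.lower()))

def jaccard(a, b):
    """Overlap between two word sets, from 0.0 (none) to 1.0 (identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def reply(message):
    """Answer with the response of the best-matching intent, if any."""
    words = tokens(message)
    best_name, best_score = None, 0.0
    for name, intent in INTENTS.items():
        score = jaccard(words, tokens(intent["examples"]))
        if score > best_score:
            best_name, best_score = name, score
    if best_name is None or best_score < MIN_SCORE:
        return FALLBACK
    return INTENTS[best_name]["response"]

print(reply("What are your opening hours?"))
print(reply("I want my money back"))
print(reply("Tell me a joke"))
```

The fallback response matters as much as the matching: a chatbot that admits it did not understand is usually more useful than one that guesses.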

Can machine learning be considered a subset of artificial intelligence?

Yes, machine learning is a subset of artificial intelligence. Machine learning is a set of techniques that enables machines to learn from data and improve their performance without being explicitly programmed. It falls under AI because learning from experience is one of the ways a machine can perform tasks that would normally require human intelligence. AI also includes approaches that do not learn from data, such as hand-written rule-based systems.

What are the different types of artificial intelligence?

There are three different types of artificial intelligence: narrow or weak AI, general or strong AI, and super AI. Narrow AI is designed to perform a specific task, such as playing chess or recognizing speech. General AI is capable of performing any intellectual task that a human can do. Super AI is hypothetical and refers to AI that is more intelligent than humans.

What are the advantages of using artificial intelligence in healthcare?

Artificial intelligence has several advantages in healthcare, including improved accuracy in diagnosis, faster and more efficient drug discovery, and improved patient outcomes. AI-powered diagnostic tools can analyze large amounts of medical data and provide accurate diagnoses, which can improve patient outcomes. AI can also help identify new drug targets and speed up the drug discovery process.

How does artificial intelligence impact the job market?

Artificial intelligence has the potential to automate many jobs, which could lead to job displacement. However, it can also create new jobs in AI development, maintenance, and oversight. AI can also improve productivity and efficiency, which can lead to economic growth and job creation.

What ethical considerations should be taken into account when developing artificial intelligence?

Developers of artificial intelligence should take into account ethical issues such as privacy, bias, and transparency. AI systems should be designed to protect user privacy and prevent the misuse of personal data. Developers should also work to ensure that AI systems are free from bias and discrimination. Finally, AI systems should be transparent, and their decision-making processes should be explainable to users.