Exploring the Power of Binary Neural Networks (BNNs)

In the search for efficient and robust neural network architectures, Binary Neural Networks (BNNs) take quantization to its limit. As the name suggests, these networks constrain weights and activations to two values, usually -1 and +1, each of which can be stored as a single bit. The approach has attracted significant attention because it can reduce computational cost, memory footprint, and energy consumption, which makes it a promising avenue for deploying deep learning models on resource-constrained devices.

In this review, we look at how BNNs work, what they offer, where they fall short, and where they are applied. By examining recent research, we aim to give a rounded picture of the technology and its potential impact on artificial intelligence.

Understanding the Basics of BNNs

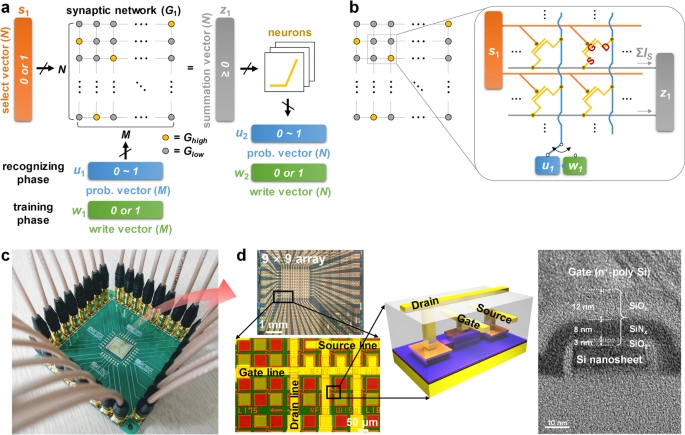

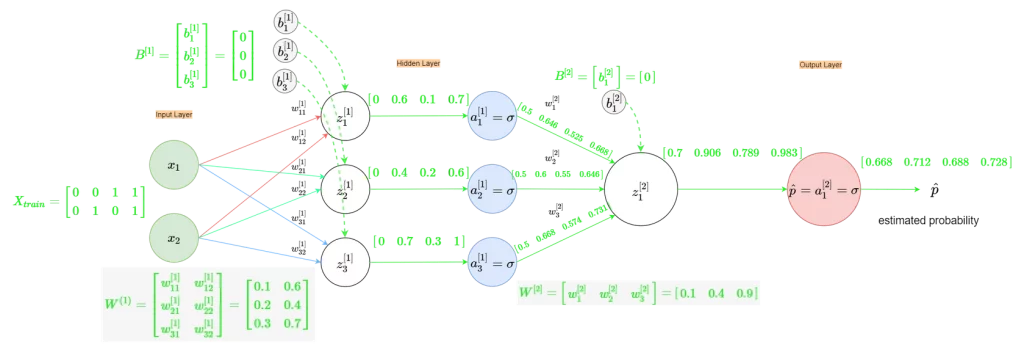

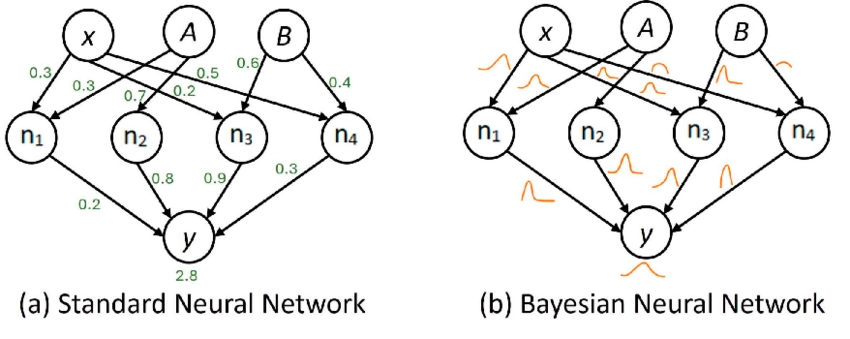

Binary Neural Networks (BNNs) are a variant of traditional neural networks that use binary representations for weights and activations. Instead of floating-point numbers, BNNs constrain these values to one of two levels, typically -1 and +1, which are stored as the bits 0 and 1. This reduces both computational cost and memory requirements. Binarization is usually deterministic (take the sign of the real value) or stochastic (sample the binary value with a probability that depends on the real value).
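To make the two binarization styles concrete, here is a minimal sketch in plain Python. It assumes the -1/+1 convention that is common in the BNN literature (each value still fits in one bit, with 0 encoding -1), and it uses a hard sigmoid as the sampling probability for the stochastic case. The function names and the sample weights are illustrative only.

```python
import random


def binarize_deterministic(x: float) -> int:
    """Map a real value to +1 or -1 by its sign (0 maps to +1 by convention here)."""
    return 1 if x >= 0 else -1


def hard_sigmoid(x: float) -> float:
    """Piecewise-linear probability in [0, 1]: clip((x + 1) / 2, 0, 1)."""
    return min(1.0, max(0.0, (x + 1.0) / 2.0))


def binarize_stochastic(x: float, rng: random.Random) -> int:
    """Return +1 with probability hard_sigmoid(x), otherwise -1."""
    return 1 if rng.random() < hard_sigmoid(x) else -1


weights = [0.8, -0.3, 0.05, -1.4]  # illustrative real-valued weights
rng = random.Random(0)             # fixed seed so the sketch is reproducible

print([binarize_deterministic(w) for w in weights])  # [1, -1, 1, -1]
print([binarize_stochastic(w, rng) for w in weights])
```

The deterministic version is cheap and is the usual choice at inference time. The stochastic version adds noise that depends on how close a value is to zero, so a weight of 0.05 lands on either side almost equally often while a weight of -1.4 always maps to -1.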

Advantages and Disadvantages of BNNs

BNNs present several compelling advantages over traditional neural networks:

- Reduced Computational Complexity: When weights and activations are both binary, the multiply-accumulate at the heart of a dot product can be replaced by a bitwise XNOR followed by a bitcount (population count). These operations are cheap and process many values per machine word, which results in faster inference and lower computational requirements. This makes BNNs well-suited for deployment on resource-constrained devices such as mobile phones, embedded systems, and Internet of Things (IoT) devices.

- Memory Efficiency: BNNs require significantly less memory than their full-precision counterparts because each binary weight or activation needs one bit instead of the 32 bits of a single-precision float, a reduction of up to 32x for the binarized parts of the model. This is particularly advantageous for deep neural networks with millions or billions of parameters, enabling deployment on devices with limited memory.

- Energy Efficiency: The reduced computational complexity and memory footprint of BNNs translate into lower energy consumption, making them attractive for applications where energy efficiency is critical, such as mobile and IoT devices.
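The XNOR and bitcount operations mentioned above can be sketched in a few lines. This example assumes the -1/+1 convention with +1 stored as bit 1 and -1 as bit 0, and it uses Python integers as stand-ins for machine words. `pack_bits` and `xnor_popcount_dot` are hypothetical helper names, not part of any library.

```python
from typing import Sequence


def pack_bits(values: Sequence[int]) -> int:
    """Pack +1/-1 values into an integer, one bit per value (+1 -> 1, -1 -> 0)."""
    word = 0
    for i, v in enumerate(values):
        if v == 1:
            word |= 1 << i
    return word


def xnor_popcount_dot(a_bits: int, b_bits: int, n: int) -> int:
    """Dot product of two length-n +1/-1 vectors computed from their packed bits."""
    mask = (1 << n) - 1
    agree = ~(a_bits ^ b_bits) & mask  # XNOR: bit is 1 where the signs match
    matches = bin(agree).count("1")    # bitcount (popcount)
    return 2 * matches - n             # matches minus mismatches


a = [1, -1, 1, 1, -1, -1, 1, -1]
b = [1, 1, -1, 1, -1, 1, 1, -1]

reference = sum(x * y for x, y in zip(a, b))  # ordinary multiply-accumulate
fast = xnor_popcount_dot(pack_bits(a), pack_bits(b), len(a))
print(reference, fast)  # 2 2
```

The identity behind the last line of `xnor_popcount_dot` is that each matching pair contributes +1 to the dot product and each mismatching pair contributes -1. On real hardware the same idea is applied to 32-bit or 64-bit words with native popcount instructions.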

However, BNNs also have some inherent disadvantages:

- Potential Accuracy Degradation: The extreme quantization of weights and activations can lead to a loss of information and, consequently, a decrease in model accuracy compared to full-precision neural networks. Careful training and optimization techniques are required to mitigate this issue.

- Training Challenges: Training BNNs can be more challenging than traditional neural networks due to the non-differentiable nature of binary operations. Specialized training algorithms and techniques, such as straight-through estimators or approximations, are often employed to enable practical training.

- Limited Applicability: BNNs have shown promising results in specific domains, such as image recognition and natural language processing. However, their applicability may be limited in tasks requiring high precision or complex computations.

Despite these challenges, the potential benefits of BNNs in terms of efficiency and deployment on resource-constrained devices have fueled ongoing research efforts to address their limitations and broaden their applicability.

Applications of BNNs in Image Recognition

Image recognition is one of the primary application domains in which BNNs have demonstrated promising results. Their ability to perform efficient inference on resource-constrained devices makes them attractive for various computer vision tasks, such as object detection, image classification, and scene understanding.

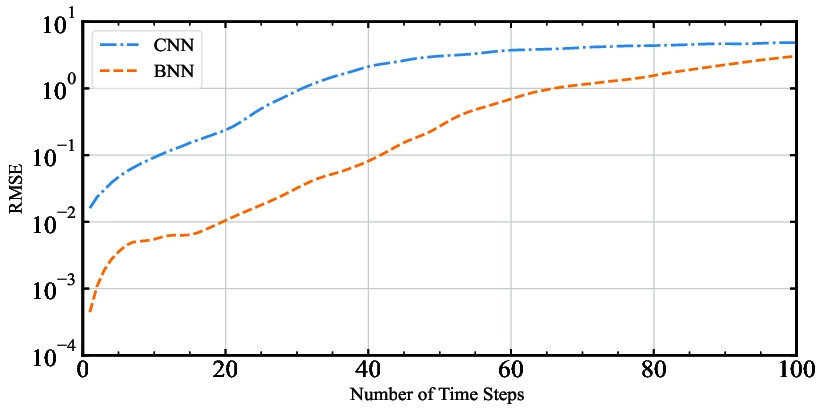

Researchers have explored using BNNs in popular image recognition architectures, including convolutional neural networks (CNNs) and residual networks (ResNets). By binarizing the weights and activations of these models, significant reductions in memory footprint and computational complexity can be achieved while maintaining reasonable accuracy levels.

One notable example is the XNOR-Net architecture, which replaces conventional convolutions with binary operations, enabling efficient inference on embedded devices. Other approaches, such as Bi-Real Net and PCNN, have also been proposed to improve the accuracy of BNNs in image recognition tasks.
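One idea associated with XNOR-Net is to pair the binary weights with a real-valued scaling factor, so that a filter W is approximated as alpha times sign(W), with alpha set to the mean absolute value of W. The sketch below illustrates only that approximation on a flat list of weights; it is not the authors' implementation, and the helper names and sample values are hypothetical.

```python
def sign(x: float) -> float:
    """Sign function with sign(0) = +1."""
    return 1.0 if x >= 0 else -1.0


def binarize_with_scale(weights):
    """Return (alpha, signs) such that alpha * signs approximates weights."""
    alpha = sum(abs(w) for w in weights) / len(weights)  # mean absolute value
    signs = [sign(w) for w in weights]
    return alpha, signs


def squared_error(weights, approx):
    """Sum of squared differences between the real weights and an approximation."""
    return sum((w - a) ** 2 for w, a in zip(weights, approx))


w = [0.5, -0.25, 0.75, -0.5]  # illustrative real-valued filter
alpha, b = binarize_with_scale(w)
scaled = [alpha * s for s in b]

print(alpha)                     # 0.5
print(squared_error(w, scaled))  # 0.125
print(squared_error(w, b))       # 1.125 (signs alone, no scaling)
```

Because the scaling factor is a single number per filter, it can be applied once after the XNOR and bitcount step, so the inner loop stays fully binary.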

BNNs in Natural Language Processing

While image recognition has been the primary focus for BNNs, their application to natural language processing (NLP) tasks has also attracted attention. BNNs have shown promising results in tasks such as text classification, machine translation, and language modeling, where efficient inference matters for real-time applications or deployment on resource-constrained devices.

Researchers have explored various techniques to binarize popular NLP models, such as recurrent neural networks (RNNs), long short-term memory (LSTM) networks, and transformer architectures. These models can substantially reduce memory footprint and computational complexity by leveraging binary representations while maintaining reasonable performance levels.

One notable example is the Bi-BloGRU architecture, which employs binary weights and activations in a gated recurrent unit (GRU) network for language modeling tasks. Other approaches, such as BinaryNet and BinaryConnect, have also been applied to NLP tasks, demonstrating the versatility of BNNs across different domains.

Training and Optimization Techniques for BNNs

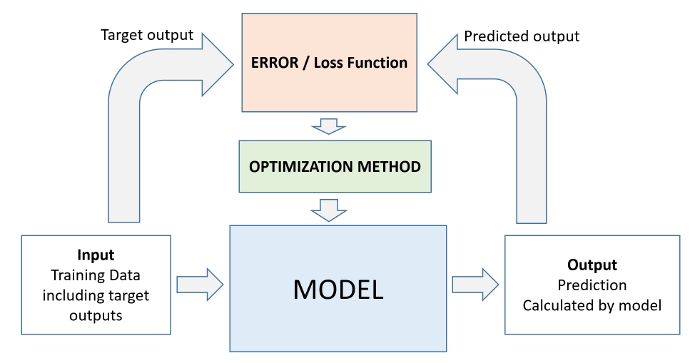

Training BNNs poses unique challenges due to the non-differentiable nature of binary operations. Researchers have developed various techniques to overcome these challenges and enable practical training of BNNs.

Straight-Through Estimator (STE): The STE is a widely used technique that approximates the gradient of the binarization function during backpropagation. The forward pass applies the actual binarization (for example, the sign function), whose derivative is zero almost everywhere. The backward pass instead treats the function as if it were the identity, often only within a clipping range such as [-1, 1], and passes the incoming gradient straight through. This lets BNNs be trained with standard gradient-based optimization algorithms.
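A minimal sketch of the straight-through estimator in its common clipped form: the forward pass outputs the sign of the input, and the backward pass hands the upstream gradient through unchanged wherever the input lies in [-1, 1] and zeroes it elsewhere. The function names, the `clip` parameter, and the sample values are assumptions made for illustration.

```python
def sign_forward(x: float) -> float:
    """Forward pass: hard binarization to +1 or -1."""
    return 1.0 if x >= 0 else -1.0


def sign_backward_ste(x: float, grad_output: float, clip: float = 1.0) -> float:
    """Backward pass: pretend the forward op was the identity inside [-clip, clip]."""
    return grad_output if abs(x) <= clip else 0.0


inputs = [-2.0, -0.4, 0.3, 1.5]    # pre-binarization values
upstream = [0.1, 0.2, -0.3, 0.4]   # gradients arriving from the next layer

outputs = [sign_forward(x) for x in inputs]
grads = [sign_backward_ste(x, g) for x, g in zip(inputs, upstream)]

print(outputs)  # [-1.0, -1.0, 1.0, 1.0]
print(grads)    # [0.0, 0.2, -0.3, 0.0]
```

Zeroing the gradient outside the clipping range stops values that are already far from zero from drifting further, since pushing them more would not change the binarized output.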

Approximation Methods: Some approaches replace the hard binarization step with a continuous or probabilistic surrogate so that gradients can be computed and conventional optimization techniques can be used. For example, BinaryConnect describes a stochastic binarization in which a weight becomes +1 with a probability given by a hard sigmoid of its real value, and BinaryNet extends binarization from the weights to the activations as well.

Regularization Techniques: Various regularization techniques have been employed to mitigate the potential accuracy degradation caused by binarization. These include techniques like dropout, weight decay, and knowledge distillation, which can help improve the generalization performance of BNNs.

Specialized Optimization Algorithms: Researchers have also developed training procedures tailored to BNNs. These aim to address the challenges posed by binary representations and can improve the convergence and stability of training. Examples include the BinaryConnect training scheme, which keeps real-valued latent weights that accumulate gradient updates while binarized copies are used in the forward and backward passes, and the BinaryRelax algorithm, which relaxes the binary constraint and tightens it gradually during training.
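One way to build binary constraints into the optimization process is to keep real-valued "latent" weights, binarize them for every forward pass, and apply the gradient updates to the latent copy. The toy loop below sketches that idea for a single linear unit. It is an illustration written for this article, not a reproduction of any published optimizer, and the dataset, `target`, `latent`, and `lr` are all hypothetical.

```python
from itertools import product


def sign(x: float) -> float:
    """Sign function with sign(0) = +1."""
    return 1.0 if x >= 0 else -1.0


def clip(x: float, lo: float = -1.0, hi: float = 1.0) -> float:
    """Keep latent weights inside [-1, 1] so they can still flip sign later."""
    return max(lo, min(hi, x))


target = [1.0, -1.0, 1.0]  # hypothetical binary weights we want to recover
inputs = [list(x) for x in product([-1.0, 1.0], repeat=3)]
labels = [sum(t * xi for t, xi in zip(target, x)) for x in inputs]

latent = [-0.2, 0.1, -0.3]  # real-valued weights, initially all the wrong sign
lr = 0.1

for step in range(10):
    binary = [sign(w) for w in latent]  # weights actually used in the forward pass
    grad = [0.0] * len(latent)
    for x, y in zip(inputs, labels):
        err = sum(b * xi for b, xi in zip(binary, x)) - y  # prediction error
        for i, xi in enumerate(x):
            grad[i] += err * xi / len(inputs)  # mean squared-error gradient
    # Straight-through step: the gradient computed for the binary weights
    # is applied to the latent weights, which are then clipped.
    latent = [clip(w - lr * g) for w, g in zip(latent, grad)]

print([sign(w) for w in latent])  # [1.0, -1.0, 1.0]
```

The binary weights only change when a latent weight crosses zero, so small updates accumulate in the latent copy until they are large enough to flip a sign.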

Hybrid Approaches: Some methods combine binary and full-precision representations within the same neural network architecture. These hybrid approaches leverage the advantages of both representations, potentially improving accuracy while maintaining computational efficiency. Examples include the Bi-Real Net and the PCNN architectures, which strategically employ binary and full-precision components within the network.

By employing these training and optimization techniques, researchers have made significant progress in improving the accuracy and performance of BNNs, paving the way for their wider adoption in various applications.

Challenges and Limitations of BNNs

Despite the promising advantages and applications of BNNs, several challenges and limitations remain to be addressed:

- Accuracy Gap: While BNNs have shown promising results, there is often a non-negligible accuracy gap compared to their full-precision counterparts. Closing this gap remains an active area of research, with ongoing efforts to develop more effective training and optimization techniques.

- Generalization to Complex Tasks: BNNs have succeeded in specific domains, such as image recognition and natural language processing. However, their applicability to more complex tasks, or to domains that depend on fine-grained representations, remains limited.

- Hardware Acceleration: While BNNs offer computational advantages in theory, fully realizing their potential requires dedicated hardware acceleration and optimization. Existing hardware architectures may not be designed to execute binary operations efficiently, which limits the practical benefits of BNNs.

- Quantization Noise and Gradient Mismatch: The binarization process introduces quantization noise, which can adversely affect the training and convergence of BNNs. Additionally, the mismatch between the binarized forward pass and the approximate gradients during backpropagation can lead to suboptimal solutions.

- Lack of Interpretability: Like traditional neural networks, BNNs can lack interpretability, which makes their decision-making processes hard to understand and explain. This limitation may hinder their adoption in applications where transparency is crucial.

Addressing these challenges requires continued research efforts, including developing more sophisticated training techniques and hardware optimizations and exploring novel architectures and approaches explicitly tailored for BNNs.

Comparison of BNNs with Traditional Neural Networks

To better understand the trade-offs and potential benefits of BNNs, it is essential to compare them with traditional full-precision neural networks:

- Computational Complexity: BNNs offer significant computational advantages over traditional neural networks due to efficient binary operations. This can lead to faster inference times and lower computational requirements, especially on resource-constrained devices.

- Memory Footprint: By representing weights and activations as binary values, BNNs require substantially less memory compared to full-precision neural networks. This memory efficiency is particularly advantageous for deep neural networks with millions or billions of parameters.

- Accuracy: BNNs have shown promising results but often exhibit a non-negligible accuracy gap compared to their full-precision counterparts. This trade-off between efficiency and precision is crucial when choosing between BNNs and traditional neural networks.

- Training Complexity: Training BNNs can be more challenging due to the non-differentiable nature of binary operations and the need for specialized training techniques. Traditional neural networks, on the other hand, can leverage well-established training algorithms and optimization methods.

- Flexibility and Expressivity: Full-precision neural networks offer greater flexibility and expressivity because their weights and activations are not restricted to two values. This can be advantageous for tasks that require high precision or complex computations.

- Hardware Compatibility: While BNNs offer theoretical computational advantages, fully realizing their potential may require dedicated hardware acceleration and optimization. Traditional neural networks can often leverage existing hardware architectures and libraries more efficiently.
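The memory side of this comparison comes down to simple arithmetic, sketched below. The parameter count is a hypothetical figure chosen only for illustration, and the calculation assumes every weight is binarized. In practice some layers are often kept at higher precision, so the real ratio would be lower.

```python
def weight_memory_bytes(num_params: int, bits_per_weight: int) -> int:
    """Bytes needed to store the weights, rounded up to a whole byte."""
    return (num_params * bits_per_weight + 7) // 8


num_params = 11_000_000  # hypothetical model size, for illustration only

fp32_bytes = weight_memory_bytes(num_params, 32)
binary_bytes = weight_memory_bytes(num_params, 1)

print(fp32_bytes / 1e6, "MB")     # 44.0 MB
print(binary_bytes / 1e6, "MB")   # 1.375 MB
print(fp32_bytes / binary_bytes)  # 32.0
```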

The choice between BNNs and traditional neural networks ultimately depends on the application’s specific requirements, such as the trade-off between efficiency and accuracy, the available computational resources, and the complexity of the task at hand.

Prospects and Developments in BNN Research

As the field of BNNs continues to evolve, several promising research directions and prospects emerge:

- Improved Training Techniques: Developing more effective and efficient training techniques for BNNs is an active area of research. This includes exploring novel optimization algorithms, regularization methods, and hybrid approaches that combine binary and full-precision representations.

- Hardware Acceleration and Optimization: Realizing the full potential of BNNs requires dedicated hardware acceleration and optimization. Efforts are underway to design specialized hardware architectures and accelerators tailored for efficient binary operations, potentially unlocking significant performance gains.

- Theoretical Foundations: Deepening the theoretical understanding of BNNs, including their expressive power, generalization capabilities, and convergence properties, can provide insights for developing more robust and effective architectures and training algorithms.

- Domain-Specific Applications: While BNNs have shown promise in areas like image recognition and natural language processing, exploring their applicability in other domains, such as speech recognition, time series analysis, and reinforcement learning, can open new avenues for efficient and resource-constrained AI solutions.

- Interpretability and Explainability: Enhancing the interpretability and explainability of BNNs is an important research direction, particularly for applications where transparency and understanding of the decision-making process are crucial.

- Hybrid and Adaptive Approaches: Combining the strengths of BNNs and traditional neural networks through hybrid or adaptive architectures could lead to more efficient and accurate models, leveraging the advantages of both approaches.

- Quantization-Aware Training: Developing techniques for quantization-aware training, where the quantization effects are explicitly considered during the training process, could help mitigate the accuracy degradation observed in BNNs.

As research in BNNs progresses, we can expect to see more efficient and accurate models, tailored hardware solutions, and broader applications across various domains, further advancing the field of artificial intelligence.

Frequently Asked Questions (FAQs)

What are Binary Neural Networks (BNNs)?

Binary Neural Networks (BNNs) are a variant of traditional neural networks in which weights and activations take one of two values (typically -1 and +1, stored as single bits) instead of floating-point numbers. Binarization reduces computational cost and memory requirements, making BNNs attractive for deployment on resource-constrained devices.

What are the advantages of BNNs?

The main advantages of BNNs include reduced computational complexity, memory efficiency, and energy efficiency. Binary operations are highly efficient, and representing weights and activations as binary values significantly reduces memory requirements, enabling deployment on resource-constrained devices.

How do BNNs compare to traditional neural networks in terms of accuracy?

While BNNs offer computational and memory advantages, they often exhibit a non-negligible accuracy gap compared to their full-precision counterparts. Careful training and optimization techniques are required to mitigate this accuracy degradation.

What are some typical applications of BNNs?

BNNs have shown promising results in applications such as image recognition, object detection, natural language processing tasks (e.g., text classification, machine translation), and language modeling. Their efficiency makes them attractive for deployment on resource-constrained devices in these domains.

How are BNNs trained?

Training BNNs poses unique challenges due to the non-differentiable nature of binary operations. Techniques like the Straight-Through Estimator (STE), approximation methods, regularization techniques, and specialized optimization algorithms are employed to train BNNs effectively.

Conclusion

Binary Neural Networks (BNNs) have emerged as a promising paradigm in the quest for efficient and resource-aware artificial intelligence solutions. By leveraging binary representations for weights and activations, BNNs offer significant computational and memory advantages, making them well-suited for deployment on resource-constrained devices.