Latest Added

Deals and Reviews

This Week's Popular In DTT

-

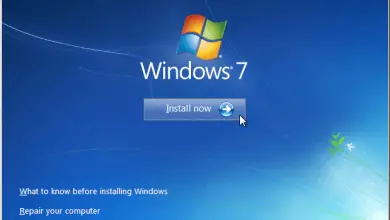

No Boot Device Windows 7

Imagine this: you sit at your computer, ready to get some work…

-

What is Debian? A Comprehensive Guide to Understanding Debian Linux

Debian is a free and open-source operating system for computers, servers, and…

-

PLC Artificial Intelligence: Advancements and Applications

PLC Artificial Intelligence (AI) is a technology that enables Programmable Logic Controllers…

-

Cyber Security Masters Europe: Top Programs and Requirements

Cybersecurity is an increasingly important field in the world of technology. With…

-

UCLA Artificial Intelligence Course: Overview and Benefits

Overview of UCLA Artificial Intelligence Course…

-

Sneaking in Cyber Security: Tips for Keeping Your Business Safe Online

Sneaking in cyber security is a growing concern for businesses and individuals…

-

Cybersecurity Honor Society: Recognizing Excellence in Cybersecurity

The Cybersecurity Honor Society is a prestigious organization that recognizes students who…

-

Smart Grid Cyber Security: Protecting the Future of Energy

Smart Grid Cyber Security is a critical topic in the energy sector…

-

Master the Steps: Install aaPanel on Linux Efficiently

Are you tired of manually configuring your server settings every time you…

Most Read

-

50 Secret Google Ranking Factors for 2023 That Make It Easy to Rank

Search Engine Optimization (SEO) is influenced by a multitude of factors. Addressing…

-

Fiction Books About Artificial Intelligence: A Comprehensive List

Fiction books about artificial intelligence have become increasingly popular in recent years.…

-

Digital Forensics in Cybersecurity C840: Understanding the Importance of Digital Evidence Analysis

Digital Forensics in Cybersecurity C840 is an important course that covers various…

-

NFT Business Opportunities

The NFT Business is the latest topic that is sweeping the internet…

-

Install aaPanel on a VPS: A Beginner’s Guide to Setting Up Your Website

Are you tired of manually setting up and managing your VPS? Look…

-

Data Science: The Key to Unlocking Business Insights

Understanding Data Science…

-

Search Arbitrage with Native Ads: Maximizing Your Ad Revenue

Search arbitrage with native ads has emerged as a powerful marketing strategy…

-

Artificial Intelligence SVG Generator: Creating Dynamic Vector Graphics with Ease

Artificial Intelligence SVG Generator is a tool…

-

Motorola One Action: A Complete Review of Features, Pros, and Cons

Looking for a balanced review of the Motorola One Action? We break…